Technology Innovation

STEREO VISION PIONEERS SINCE 1997

Since 1997, we have been developing and manufacturing industry-leading 2D and 3D stereo vision sensors. We have made countless innovations since launching the world's first mass-market stereo vision sensor. Improvements in accuracy, image quality, calibration, size, and reliability come from constant hardware and software development. Our vast experience and commitment to quality enable us to integrate industry-leading technology into products that last beyond the normal life cycle: many first-generation people tracking and counting sensors are still in use today!

The evolution of our stereo vision technology from 1997 (left) to 2017 (right)

LET'S GET TECHNICAL

FLIR has made 3D stereo vision the most accurate and consistent footfall technology by developing innovative hardware and software packages that include complete stereo processing support – from image correction and alignment to dense correlation-based stereo mapping.

HOW DOES IT WORK?

3D stereo vision works in a similar way to 3D sensing in human vision. It begins with identifying image pixels that correspond to the same point in a scene observed by multiple cameras. The 3D position of that point can then be established by triangulation using a ray from each camera. The more corresponding pixels are identified, the more 3D points can be determined from a single set of images. Correlation stereo methods attempt to obtain correspondences for every pixel in the stereo image, resulting in tens of thousands of 3D values generated with every stereo image.

Each 3D sensor is equipped with two cameras.

This allows the sensor to calculate 3D depth.
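The triangulation step described above can be sketched for the simplest case: a rectified pair of identical pinhole cameras, where depth follows from the horizontal offset (disparity) between matched pixels. This is a minimal illustration, not FLIR's implementation; the function name and the parameters `focal_px`, `baseline_m`, `cx`, and `cy` are assumptions introduced here.

```python
def triangulate(x_left, x_right, y, focal_px, baseline_m, cx, cy):
    """Return (X, Y, Z) in metres, relative to the left camera, for one matched pixel pair.

    Assumes a rectified pair of identical pinhole cameras (hypothetical parameters):
      focal_px   - focal length in pixels
      baseline_m - distance between the two camera centres in metres
      cx, cy     - principal point (image centre) in pixels
    """
    disparity = x_left - x_right  # horizontal shift of the same scene point
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline_m / disparity  # depth from similar triangles
    x = (x_left - cx) * z / focal_px       # back-project the left-image ray
    y3 = (y - cy) * z / focal_px
    return x, y3, z


# A point seen at column 340 in the left image and 320 in the right image
point = triangulate(340, 320, 240, focal_px=500, baseline_m=0.1, cx=320, cy=240)
```

Because depth is inversely proportional to disparity, nearby objects produce large pixel shifts and distant objects produce small ones.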

3D STEREO IMAGING PIPELINE

HOW WE STAY ACCURATE: CALIBRATION AND RECTIFICATION

Each and every FLIR Brickstream sensor is factory-calibrated for lens distortion and sensor misalignments to ensure consistency of calibration across all sensors. During the rectification process, epipolar lines are aligned to within 0.05* pixels RMS error. Calibration and rectification are key to getting high-quality disparity images from a 3D sensor and allow for consistent and accurate footfall data gathering between sensors. The sensor is also specifically designed to protect the calibration against mechanical shock and vibration. Below is the technology workflow that FLIR Brickstream 3D sensors use to calculate 3D stereo height images.

1) RAW IMAGE

Raw images are captured from the left and right cameras on the sensor. Minor lens distortion creates a barreling of the images.

2) RECTIFIED IMAGE

The pair of images is corrected to remove lens distortion and aligned horizontally with each other. The images now have straight perspective lines.
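To illustrate what removing lens distortion involves, here is a minimal sketch that assumes a one-coefficient radial distortion model in normalized image coordinates. The source does not state which distortion model the sensors use, so the model, the coefficient `k1`, and both function names are assumptions for illustration only.

```python
def distort(xu, yu, k1):
    """Apply one-coefficient radial distortion to an ideal (undistorted) point.

    Coordinates are normalized (relative to the image centre, in focal lengths).
    A negative k1 pulls points toward the centre, which produces barreling.
    """
    r2 = xu * xu + yu * yu
    scale = 1 + k1 * r2
    return xu * scale, yu * scale


def undistort(xd, yd, k1, iterations=20):
    """Invert distort() by fixed-point iteration, starting from the distorted point."""
    xu, yu = xd, yd
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        scale = 1 + k1 * r2
        xu, yu = xd / scale, yd / scale
    return xu, yu


# Round trip: distort an ideal point, then recover it
xd, yd = distort(0.3, 0.2, k1=-0.2)
xu, yu = undistort(xd, yd, k1=-0.2)
```

In a full rectification step, a correction like this would typically be combined with a rotation of each image so that matching points fall on the same row in both images.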

3) EDGE IMAGE

Edge enhancement is then applied to create edge images that are not biased by image brightness.
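One simple way to see how an edge image can be independent of brightness is a second-difference (discrete Laplacian) filter along a scanline. The source does not name the filter the sensors use, so this is only an assumed stand-in, and `edge_row` is a hypothetical helper.

```python
def edge_row(row):
    """Second-difference filter on one scanline: 2*p[i] - p[i-1] - p[i+1].

    Border pixels reuse their own value for the missing neighbour.
    Adding a constant brightness offset to every pixel leaves the output unchanged.
    """
    n = len(row)
    out = []
    for i in range(n):
        left = row[max(i - 1, 0)]
        right = row[min(i + 1, n - 1)]
        out.append(2 * row[i] - left - right)
    return out


row = [10, 10, 10, 50, 50, 50]
brighter = [v + 40 for v in row]   # same scene, uniformly brighter
print(edge_row(row))               # [0, 0, -40, 40, 0, 0]
print(edge_row(brighter))          # [0, 0, -40, 40, 0, 0]
```

Flat regions map to zero and only the step between dark and bright survives, so a left and right image with different exposure offsets still produce matching edge responses. This particular filter removes offsets only; it does not compensate for differences in gain.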

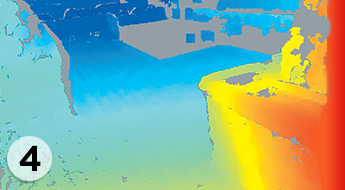

4) 3D DEPTH IMAGE

For each pixel in the right image, a corresponding pixel in the left image is obtained via correlation. (Colorized to show depth)
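The correspondence search can be sketched for a single scanline of a rectified pair. This example scores candidate matches with the sum of absolute differences (SAD) over a small window, which is a common stand-in for correlation and not necessarily the measure the sensors use. The function name, `half_window`, and `max_disparity` are assumptions introduced for illustration.

```python
def match_pixel(right_row, left_row, x, half_window=2, max_disparity=16):
    """Return the disparity d (in pixels) whose window in left_row best matches
    the window centred on right_row[x], or None if no window fits in the row.

    In a rectified pair, a point at column x in the right image appears at
    column x + d in the left image, with d >= 0.
    """
    n = len(right_row)
    if x - half_window < 0 or x + half_window >= n:
        return None
    best_d, best_cost = None, None
    for d in range(max_disparity + 1):
        if x + d + half_window >= n:
            break  # candidate window would run off the end of the row
        cost = sum(
            abs(right_row[x + k] - left_row[x + d + k])
            for k in range(-half_window, half_window + 1)
        )
        if best_cost is None or cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


right_row = [0, 0, 0, 5, 9, 5, 0, 0, 0, 0, 0, 0, 0, 0]
left_row = [0, 0, 0, 0, 0, 0, 5, 9, 5, 0, 0, 0, 0, 0]  # same feature, shifted by 3
print(match_pixel(right_row, left_row, x=4))  # 3
```

Repeating this search for every pixel yields a disparity image, and larger disparities correspond to points closer to the sensor.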

FLIR'S 3D VISION TECHNOLOGY DELIVERS